Self-managed Apache Airflow deployment on Amazon EKS

Introduction

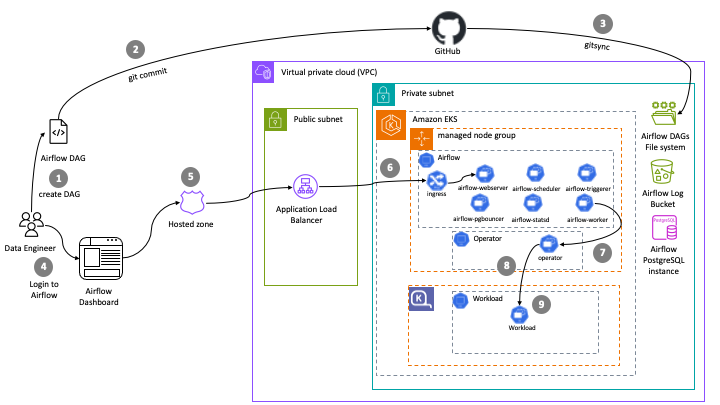

This pattern deploys self-managed Apache Airflow on Amazon EKS. The blueprint runs Airflow on Amazon EKS managed node groups and uses Karpenter to provision compute for the workloads.

Architecture

This pattern uses opinionated defaults to keep the deployment experience simple, while staying flexible enough that you can pick and choose the add-ons you need during deployment. We recommend keeping the defaults and customizing only when you have a viable replacement available.

In terms of infrastructure, below are the resources that are created by this pattern:

- EKS cluster control plane with a public endpoint (recommended for demo/PoC environments)
- One managed node group
  - Core node group with 3 instances spanning multiple AZs for running Apache Airflow and other system-critical pods, e.g., Cluster Autoscaler, CoreDNS, observability, and logging.
- Apache Airflow core components (with airflow-core.tf):
  - Amazon RDS PostgreSQL instance and security group for the Airflow metadata database.
  - Airflow namespace
  - Kubernetes service accounts and AWS IAM roles for service accounts (IRSA) for the Airflow Webserver, Airflow Scheduler, and Airflow Worker.
  - Amazon Elastic File System (EFS), EFS mounts, Kubernetes Storage Class for EFS, and Kubernetes Persistent Volume Claim for mounting Airflow DAGs into Airflow pods.
  - Amazon S3 log bucket for Airflow logs

AWS for Fluent Bit is used for logging, and a combination of Prometheus, Amazon Managed Prometheus, and open source Grafana is used for observability. The complete list of available add-ons is shown below.

We recommend running all the default system add-ons on a dedicated EKS managed nodegroup such as core-node-group as provided by this pattern.

We don't recommend removing critical add-ons (Amazon VPC CNI, CoreDNS, Kube-proxy).

| Add-on | Enabled by default? | Benefits | Link |
|---|---|---|---|
| Amazon VPC CNI | Yes | VPC CNI is available as an EKS add-on and is responsible for creating ENIs and IPv4 or IPv6 addresses for your application pods | VPC CNI Documentation |
| CoreDNS | Yes | CoreDNS is available as an EKS add-on and is responsible for resolving DNS queries for your applications and for the Kubernetes cluster | EKS CoreDNS Documentation |
| Kube-proxy | Yes | Kube-proxy is available as an EKS add-on; it maintains network rules on your nodes and enables network communication to your application pods | EKS kube-proxy Documentation |
| Amazon EBS CSI driver | Yes | EBS CSI driver is available as an EKS add-on and allows EKS clusters to manage the lifecycle of EBS volumes | EBS CSI Driver Documentation |
| Amazon EFS CSI driver | Yes | The Amazon EFS Container Storage Interface (CSI) driver provides a CSI interface that allows Kubernetes clusters running on AWS to manage the lifecycle of Amazon EFS file systems | EFS CSI Driver Documentation |
| Karpenter | Yes | Karpenter is a nodegroup-less autoscaler that provides just-in-time compute capacity for workloads on Kubernetes clusters | Karpenter Documentation |
| Cluster Autoscaler | Yes | Kubernetes Cluster Autoscaler automatically adjusts the size of the Kubernetes cluster and is available for scaling nodegroups (such as core-node-group) in the cluster | Cluster Autoscaler Documentation |
| Cluster proportional autoscaler | Yes | Responsible for scaling CoreDNS pods in your Kubernetes cluster | Cluster Proportional Autoscaler Documentation |
| Metrics server | Yes | Kubernetes metrics server is responsible for aggregating CPU, memory, and other container resource usage within your cluster | EKS Metrics Server Documentation |
| Prometheus | Yes | Prometheus is responsible for monitoring the EKS cluster, including the workloads running in it. The Prometheus deployment scrapes metrics and ingests them into Amazon Managed Prometheus and Kubecost | Prometheus Documentation |
| Amazon Managed Prometheus | Yes | Responsible for storing and scaling EKS cluster and workload metrics | Amazon Managed Prometheus Documentation |
| Kubecost | Yes | Kubecost is responsible for providing a cost breakdown by workload. You can monitor costs per job, namespace, or label | EKS Kubecost Documentation |
| CloudWatch metrics | Yes | CloudWatch Container Insights metrics provide a simple, standardized way to monitor both AWS resources and EKS resources on a CloudWatch dashboard | CloudWatch Container Insights Documentation |
| AWS for Fluent-bit | Yes | Can be used to publish EKS cluster and worker node logs to CloudWatch Logs or a third-party logging system | AWS For Fluent-bit Documentation |
| AWS Load Balancer Controller | Yes | The AWS Load Balancer Controller manages AWS Elastic Load Balancers for a Kubernetes cluster | AWS Load Balancer Controller Documentation |

Prerequisites

Ensure that you have installed the following tools on your machine.
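As a rough pre-flight check, a small shell function can confirm that the required tools are on your PATH before you start. This is only a sketch: `require_tools` is a hypothetical helper, and the tool names in the usage comment (`git`, `aws`, `kubectl`, `terraform`) are an assumption inferred from the commands and directory names used later in this guide, not an official prerequisite list.

```bash
# Report any command-line tools that are missing from PATH.
# Returns 0 when every tool is found, 1 otherwise.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage (the tool list is an assumption, adjust it to your environment):
#   require_tools git aws kubectl terraform || exit 1
```

Running the check first avoids a half-finished deployment that fails partway through because a CLI is not installed.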

Deploying the Solution

Clone the repository

git clone https://github.com/awslabs/data-on-eks.git

Navigate into the self-managed-airflow directory and run the install.sh script

cd data-on-eks/schedulers/terraform/self-managed-airflow

chmod +x install.sh

./install.sh

Verify the resources

Create kubectl config

Update the placeholder for AWS region and run the below command.

mv ~/.kube/config ~/.kube/config.bk

aws eks update-kubeconfig --region <region> --name self-managed-airflow
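The `mv` command above fails if no kubeconfig exists yet, and it overwrites any earlier `config.bk`. A more defensive variant might look like the sketch below; `backup_kubeconfig` is a hypothetical helper name, and the timestamped file name is an illustrative choice rather than something this blueprint prescribes.

```bash
# Move an existing kubeconfig aside under a timestamped name.
# Does nothing (and succeeds) when the file does not exist.
backup_kubeconfig() {
  local cfg="${1:-$HOME/.kube/config}"
  if [ -f "$cfg" ]; then
    local backup="${cfg}.$(date +%Y%m%d%H%M%S).bk"
    mv "$cfg" "$backup"
    echo "$backup"   # print the backup path so it can be restored later
  fi
}

# Usage:
#   backup_kubeconfig
#   aws eks update-kubeconfig --region <region> --name self-managed-airflow
```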

Describe the EKS Cluster

aws eks describe-cluster --name self-managed-airflow

Verify the EFS PV and PVC created by this deployment

kubectl get pvc -n airflow

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

airflow-dags Bound pvc-157cc724-06d7-4171-a14d-something 10Gi RWX efs-sc 73m

kubectl get pv -n airflow

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-157cc724-06d7-4171-a14d-something 10Gi RWX Delete Bound airflow/airflow-dags efs-sc 74m
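If you want to script this check rather than read the table by eye, the output can be filtered with `awk`. The following is a minimal sketch that assumes the default column layout shown above (name in column 1, status in column 2); `pvc_is_bound` is a hypothetical helper name.

```bash
# Read `kubectl get pvc` table output on stdin.
# Succeeds only if the PVC named in $1 is listed with STATUS "Bound".
pvc_is_bound() {
  awk -v name="$1" '$1 == name && $2 == "Bound" { found = 1 } END { exit !found }'
}

# Usage:
#   kubectl get pvc -n airflow | pvc_is_bound airflow-dags && echo "DAG volume is bound"
```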

Verify the EFS Filesystem

aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text

Verify S3 bucket created for Airflow logs

aws s3 ls | grep airflow-logs-

Verify the Airflow deployment

kubectl get deployment -n airflow

NAME READY UP-TO-DATE AVAILABLE AGE

airflow-pgbouncer 1/1 1 1 77m

airflow-scheduler 2/2 2 2 77m

airflow-statsd 1/1 1 1 77m

airflow-triggerer 1/1 1 1 77m

airflow-webserver 2/2 2 2 77m
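To spot a deployment that has not finished rolling out, you can compare the two numbers in the READY column. The sketch below assumes the default table layout shown above; `not_ready_deployments` is a hypothetical helper name.

```bash
# Read `kubectl get deployment` table output on stdin and print the name of
# every deployment whose ready replica count differs from the desired count.
not_ready_deployments() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Usage (prints nothing when every deployment is fully ready):
#   kubectl get deployment -n airflow | not_ready_deployments
```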

Fetch Postgres RDS password

The Amazon RDS for PostgreSQL database password can be fetched from AWS Secrets Manager.

- Log in to the AWS console and open Secrets Manager
- Click on the postgres secret name
- Click on the Retrieve secret value button to verify the Postgres DB master password
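The same value can also be read from the command line with the AWS CLI. This is a sketch under assumptions: `get_secret_value` is a hypothetical wrapper, and the secret name is left as a placeholder because the exact name generated by this deployment is not stated here.

```bash
# Print the SecretString of the Secrets Manager secret named in $1.
# Requires AWS credentials with secretsmanager:GetSecretValue permission.
get_secret_value() {
  aws secretsmanager get-secret-value \
    --secret-id "$1" \
    --query SecretString \
    --output text
}

# Usage (replace the placeholder with the secret name from your account):
#   get_secret_value "<your-postgres-secret-name>"
```

Avoid pasting the output into shared terminals or logs, since it contains the database master password.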

Login to Airflow Web UI

This deployment creates an Ingress object with a public load balancer for demo purposes. An internal (private) load balancer, by contrast, can only be accessed from within the VPC.

For production workloads, you can modify airflow-values.yaml to choose an internal load balancer. In addition, it is recommended to use Route 53 for the Airflow domain and ACM for generating certificates so that Airflow is accessed over HTTPS.

Execute the following command to get the ALB DNS name

kubectl get ingress -n airflow

NAME CLASS HOSTS ADDRESS PORTS AGE

airflow-airflow-ingress alb * k8s-dataengineering-c92bfeb177-randomnumber.us-west-2.elb.amazonaws.com 80 88m

The ALB URL above will be different for your deployment, so use your own URL and open it in a browser.

e.g., Open URL http://k8s-dataengineering-c92bfeb177-randomnumber.us-west-2.elb.amazonaws.com/ in a browser
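Rather than copying the hostname by hand, you could build the URL from the ingress listing. The sketch below assumes the default column layout shown above, where ADDRESS is the fourth column; `ingress_url` is a hypothetical helper name. It prints nothing while the load balancer is still being provisioned and the ADDRESS column is empty.

```bash
# Read `kubectl get ingress` table output on stdin and print an http:// URL
# for the ingress named in $1. Rows with fewer than 6 fields have no ADDRESS yet.
ingress_url() {
  awk -v name="$1" '$1 == name && NF >= 6 { print "http://" $4 "/" }'
}

# Usage:
#   kubectl get ingress -n airflow | ingress_url airflow-airflow-ingress
```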

By default, Airflow creates a default user with the username admin and the password admin.

Log in with the admin username and password, create new users for the Admin and Viewer roles, and then delete the default admin user.

Execute Sample Airflow Job

- Log in to the Airflow Web UI
- Click on the DAGs link at the top of the page. This shows the DAGs pre-created by the GitSync feature
- Execute the hello_world_scheduled_dag DAG by clicking on the Play button (>)
- Verify the DAG execution from the Graph link
- All the tasks will go green after a few minutes
- Click on one of the green tasks, which opens a popup with a log link where you can verify that the logs point to S3
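The same DAG can in principle be started from a terminal by running the Airflow CLI inside the scheduler pod. This is a sketch, not part of the blueprint: `trigger_dag` is a hypothetical helper, the `airflow-scheduler` deployment name is taken from the deployment listing earlier in this guide, and depending on your pod layout you may need to add `-c <container>` to `kubectl exec` to select the scheduler container.

```bash
# Unpause and then trigger the DAG named in $1 by running the Airflow CLI
# inside the scheduler deployment. $2 is the namespace (defaults to "airflow").
trigger_dag() {
  local dag_id="$1" namespace="${2:-airflow}"
  kubectl exec -n "$namespace" deploy/airflow-scheduler -- airflow dags unpause "$dag_id" &&
    kubectl exec -n "$namespace" deploy/airflow-scheduler -- airflow dags trigger "$dag_id"
}

# Usage:
#   trigger_dag hello_world_scheduled_dag
```

After triggering, the run should appear in the Web UI exactly as if you had clicked the Play button.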

Airflow to run Spark workloads with Karpenter

Cleanup

To avoid unwanted charges to your AWS account, delete all the AWS resources created during this deployment.